How do I handle encoding issues across platforms?

Encoding issues occur when different systems interpret the same stored bytes as different characters. All text is stored as numbers (bytes), but encoding standards such as UTF-8, ISO-8859-1, and CP-1252 map those byte values to characters differently. When a file moves between platforms, the reader may assume a different encoding from the one the writer used, and the result is garbled text known as "mojibake". The usual platforms involved are Windows (UTF-16 internally, plus legacy "ANSI" code pages such as CP-1252 for many older files), Linux and macOS (primarily UTF-8), and web browsers (which follow the page's declared charset and usually expect UTF-8).

For instance, a CSV file created on Windows using CP-1252 encoding might display accented characters (like é) incorrectly when opened in Linux, expecting UTF-8, showing symbols like é. Similarly, text data retrieved from an older database using ISO-8859-1 might render incorrectly on a modern UTF-8 encoded website. Tools like text editors (Notepad++, VS Code) and programming languages (Python's chardet library, iconv command line tool) help identify and convert encodings.
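
A conversion like this can also be scripted. The sketch below is a minimal Python example that assumes the source is either UTF-8 or CP-1252. Instead of chardet's statistical detection, it uses a simple rule: try UTF-8 first, then fall back to CP-1252. The function names read_legacy_text and convert_to_utf8 and the file names are hypothetical. When the source encoding is already known, iconv -f CP1252 -t UTF-8 legacy.csv > converted.csv performs the same conversion on the command line.

```python
import tempfile
from pathlib import Path
from typing import Tuple


def read_legacy_text(path: Path) -> Tuple[str, str]:
    """Decode a file as UTF-8, falling back to CP-1252 if that fails.

    Returns the decoded text and the encoding that succeeded. This is a
    simple fallback heuristic, not real detection: CP-1252 decodes almost
    any byte sequence, so the fallback can "succeed" with the wrong encoding.
    """
    data = path.read_bytes()
    try:
        return data.decode("utf-8"), "utf-8"
    except UnicodeDecodeError:
        return data.decode("cp1252"), "cp1252"


def convert_to_utf8(src: Path, dst: Path) -> str:
    """Rewrite src as UTF-8 in dst and return the source encoding used."""
    text, encoding = read_legacy_text(src)
    # newline="" writes line endings (e.g. CSV's \r\n) exactly as decoded.
    with dst.open("w", encoding="utf-8", newline="") as f:
        f.write(text)
    return encoding


# Usage with a throwaway CP-1252 file (file names are hypothetical).
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "legacy.csv"
    dst = Path(tmp) / "converted.csv"
    src.write_bytes("name,city\r\nRené,Montréal\r\n".encode("cp1252"))
    detected = convert_to_utf8(src, dst)
    converted = dst.read_bytes()

print(detected)   # cp1252
print(converted)  # b'name,city\r\nRen\xc3\xa9,Montr\xc3\xa9al\r\n'
```

Trying UTF-8 first is deliberate. Non-ASCII legacy text rarely forms valid UTF-8 by accident, whereas CP-1252 accepts nearly any byte sequence, so reversing the order would misread genuine UTF-8 files.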

Modern systems increasingly standardize on UTF-8, which can encode every Unicode character. Handling encodings reliably means specifying them explicitly and consistently in several places:

- in source files (like # -*- coding: utf-8 -*- in Python, although UTF-8 is already the default for Python 3 source)
- in web pages (<meta charset="utf-8">)
- whenever code reads or writes files
- during data transfers

Test files on every target platform. Legacy systems that use older encodings remain a challenge, but adopting UTF-8 throughout minimizes these issues and ensures that text in any language displays correctly.
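
In code, specifying encodings consistently mostly means never relying on defaults. The Python sketch below writes a small CSV and reads it back, naming the encoding explicitly both times. The rows and file name are made-up sample data. The choice of "utf-8-sig" (UTF-8 with a byte order mark) is an assumption aimed at spreadsheet users on Windows rather than a requirement.

```python
import csv
import tempfile
from pathlib import Path

rows = [["name", "city"], ["René", "Montréal"], ["Zoë", "Kraków"]]

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "export.csv"  # hypothetical file name

    # Always pass encoding= explicitly: without it, open() falls back to the
    # locale encoding, e.g. CP-1252 on many Windows systems.
    # "utf-8-sig" writes a byte order mark (BOM), which some Windows tools,
    # such as Excel, use to recognize a CSV file as UTF-8.
    # newline="" lets the csv module control line endings, as its docs advise.
    with path.open("w", encoding="utf-8-sig", newline="") as f:
        csv.writer(f).writerows(rows)

    raw = path.read_bytes()

    # Reading with "utf-8-sig" strips the BOM if present and also works on
    # UTF-8 files without one.
    with path.open("r", encoding="utf-8-sig", newline="") as f:
        round_trip = list(csv.reader(f))

print(raw[:3])             # b'\xef\xbb\xbf'
print(round_trip == rows)  # True
```

For files consumed only by other programs, plain "utf-8" without a BOM is usually the safer choice. Some tools treat the BOM as stray characters at the start of the first field.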

Related Recommendations

Quick Article Links

Can conflicting file timestamps trigger duplication?

File timestamps record the creation, modification, or last access time of a file. Conflicting timestamps occur when diff...

How do I know which format my printer supports?

Determining your printer's supported formats means identifying the file types it can process, such as PDF, JPG, or DOCX....

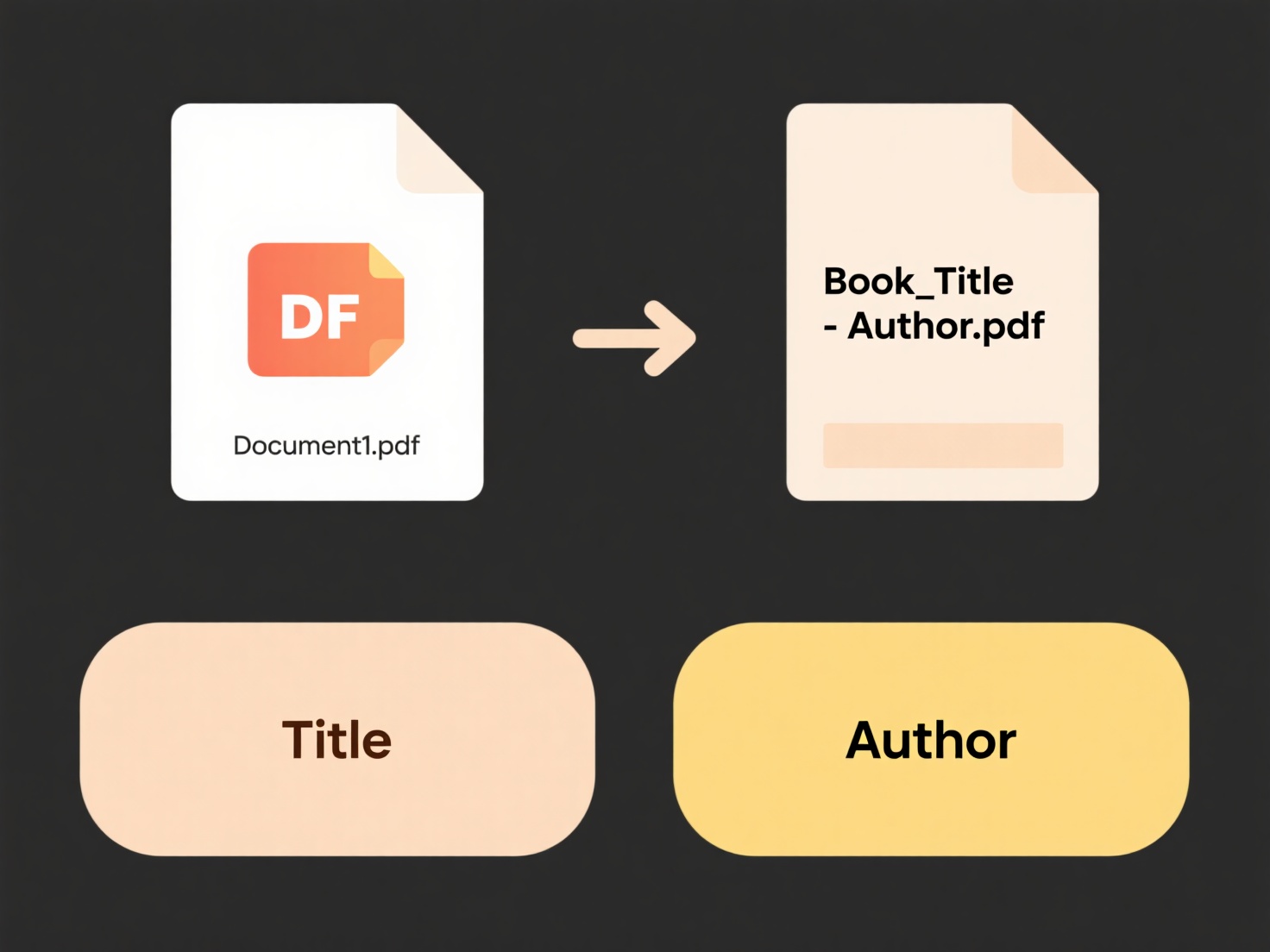

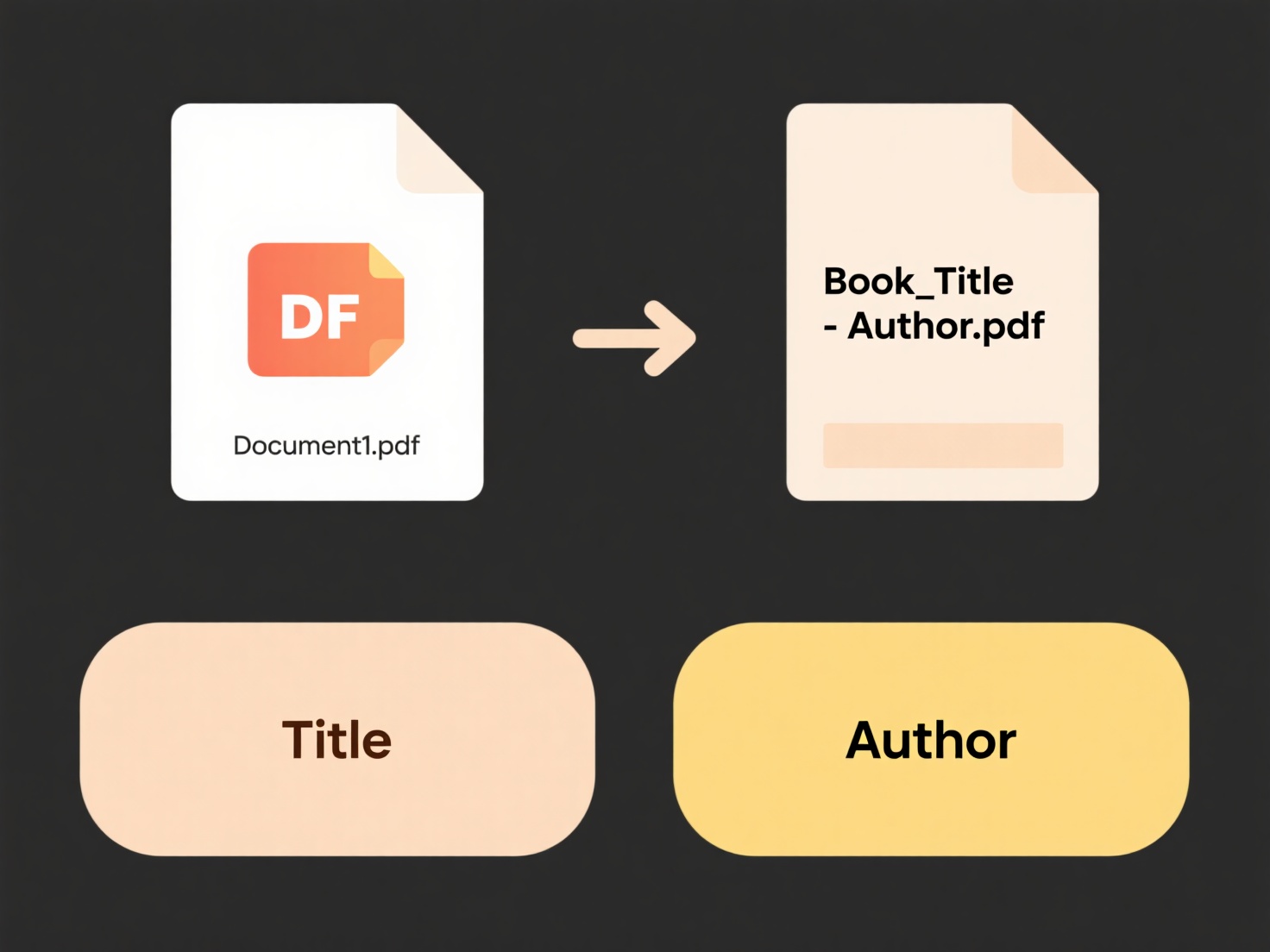

How do I exclude files from batch rename by keyword?

Batch renaming changes multiple filenames simultaneously using defined rules. Excluding files by keyword involves specif...